Pre-Requisites

This guide uses the RPM-based installation method.

- Determine the number of nodes you want in the OpenShift cluster.

- Bring up the required number of EC2 instances using the AWS Management Console.

- Determine which of them will be the Master node, the Infra node(s) and the Compute node(s).

The example below shows 1 Master node, 2 Compute nodes and 1 Infra node.

Installation Steps

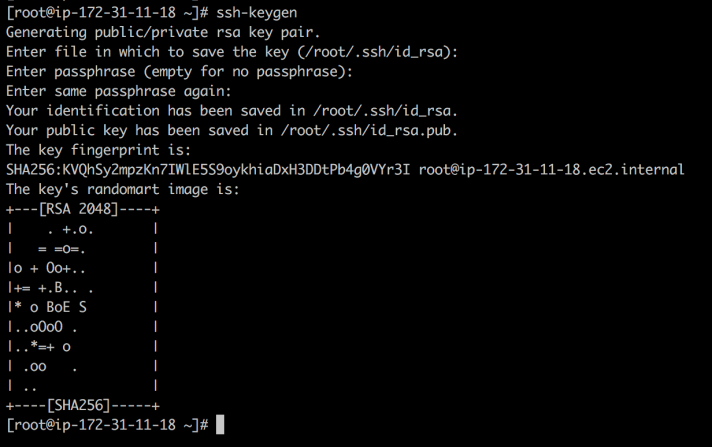

Step 1: Exchange keys between the Master node and the other nodes

- Log in to the Master node as the root user.

- Generate an SSH key pair on the Master node using the command below:

ssh-keygen

- Display the public key generated on the Master node and copy its contents:

cat /root/.ssh/id_rsa.pub

On All Nodes:

Append the contents of the public key to the end of the file ~/.ssh/authorized_keys on every node in the cluster, including the Master itself:

vi ~/.ssh/authorized_keys

- From the Master node, SSH into each of the nodes as the root user and answer “yes” when asked to accept the host key. This avoids any prompts during the actual OpenShift installation.
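Pasting the key by hand on several nodes makes it easy to add it twice or to miss a node. A minimal sketch of the same step as a bash function, assuming the Master's id_rsa.pub has already been copied to the node as a file (for example with scp); the function name append_key_once is hypothetical and not part of any tool:

```shell
#!/usr/bin/env bash
# Hypothetical helper: append a public key to an authorized_keys file only if
# it is not already there, so the step can be re-run safely on every node.
# Usage: append_key_once <public-key-file> [authorized-keys-file]
append_key_once() {
  local pubkey_file=$1
  local auth_file=${2:-$HOME/.ssh/authorized_keys}
  local key
  key=$(cat "$pubkey_file") || return 1

  # Make sure the directory and file exist with the permissions sshd expects.
  mkdir -p "$(dirname "$auth_file")"
  chmod 700 "$(dirname "$auth_file")"
  touch "$auth_file"
  chmod 600 "$auth_file"

  # -x: match the whole line, -F: treat the key as a literal string.
  if ! grep -qxF -- "$key" "$auth_file"; then
    printf '%s\n' "$key" >> "$auth_file"
  fi
}
```

For example, run append_key_once /tmp/id_rsa.pub on each node, where /tmp/id_rsa.pub stands for wherever you copied the key; running it a second time leaves authorized_keys unchanged.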

Step 2: Install Required Packages

Perform the following steps on all the nodes (Master, Infra, Compute):

- Install required packages on all nodes using yum

yum -y install wget git net-tools bind-utils yum-utils iptables-services bridge-utils bash-completion kexec-tools sos psacct
yum -y update
yum -y install docker-1.13.1

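- Install the packages for the RPM-based installation. The EPEL repository is installed and then disabled, so it is only used when explicitly enabled for Ansible and pyOpenSSL:

yum -y install https://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm
sed -i -e "s/^enabled=1/enabled=0/" /etc/yum.repos.d/epel.repo
yum -y --enablerepo=epel install ansible pyOpenSSL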
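Since these packages have to be present on every node, a quick check afterwards can save a failed playbook run later. A minimal sketch, assuming the installed package names match the yum commands above (docker for docker-1.13.1); missing_packages is a hypothetical helper that reads installed package names on stdin:

```shell
#!/usr/bin/env bash
# Package names taken from the yum commands in Step 2.
required_packages=(wget git net-tools bind-utils yum-utils iptables-services
  bridge-utils bash-completion kexec-tools sos psacct docker ansible pyOpenSSL)

# Hypothetical helper: print each required package that does not appear in
# the list of installed package names (one per line) read from stdin.
missing_packages() {
  local installed pkg
  installed=$(cat)
  for pkg in "${required_packages[@]}"; do
    # -x: whole-line match, -F: literal package name.
    if ! grep -qxF -- "$pkg" <<< "$installed"; then
      printf '%s\n' "$pkg"
    fi
  done
}
```

On each node, rpm -qa --queryformat '%{NAME}\n' | missing_packages prints nothing when everything is installed.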

Step 3: Docker Storage setup

Perform the following steps on all the nodes (Master, Infra, Compute):

- Create an EC2 (EBS) volume for each node, including the Master, in the same Availability Zone as the node it will be attached to.

- Attach each volume to its corresponding node.

- Run lsblk on each node to confirm that the volume is attached; in this example it shows up as xvdf:

lsblk

- On all the nodes, set DEVS in the file /etc/sysconfig/docker-storage-setup to the path of the attached block device (/dev/xvdf):

cat <<EOF > /etc/sysconfig/docker-storage-setup
DEVS=/dev/xvdf
VG=docker-vg
EOF

- Run the following command to set up Docker storage:

docker-storage-setup

- Run the lvs command and check that the logical volume created by docker-storage-setup belongs to the docker-vg volume group:

lvs

- Set the Docker daemon options on all nodes using the following command:

sudo sed -i '/OPTIONS=.*/c\OPTIONS="--selinux-enabled --insecure-registry 172.30.0.0/16"' /etc/sysconfig/docker
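The expected lsblk and lvs output is not reproduced above, so here is a minimal sketch of the same checks as bash functions that read each command's output on stdin. The function names are hypothetical, and the sketch assumes the device is xvdf and the volume group is docker-vg, as configured above:

```shell
#!/usr/bin/env bash
# Hypothetical check: succeeds if device NAME appears in the output of
#   lsblk -dn -o NAME
# read from stdin. Run it before docker-storage-setup.
has_block_device() {
  grep -qxF -- "$1"
}

# Hypothetical check: succeeds if any logical volume in the output of
#   lvs --noheadings -o lv_name,vg_name
# read from stdin belongs to volume group VG. Run it after docker-storage-setup.
has_volume_group() {
  awk -v vg="$1" '$2 == vg { found = 1 } END { exit !found }'
}

# Hypothetical check: succeeds if FILE (default /etc/sysconfig/docker) contains
# the OPTIONS line written by the sed command above.
docker_options_set() {
  grep -qxF 'OPTIONS="--selinux-enabled --insecure-registry 172.30.0.0/16"' \
    "${1:-/etc/sysconfig/docker}"
}
```

For example: lsblk -dn -o NAME | has_block_device xvdf before running docker-storage-setup, lvs --noheadings -o lv_name,vg_name | has_volume_group docker-vg after it, and docker_options_set once the sed command has run. Each returns a non-zero status when the check fails.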

Step 4: Preparing the Ansible inventory file

Perform the following steps on the Master Node:

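- Edit the Ansible inventory file:

vi /etc/ansible/hosts

- Enter the following content, replacing the example IP addresses with the public IPs of your own instances: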

# Create an OSEv3 group that contains the masters, nodes, and etcd groups
[OSEv3:children]
masters
nodes
etcd

# Set variables common for all OSEv3 hosts
[OSEv3:vars]
# SSH user, this user should allow ssh based auth without requiring a password
ansible_ssh_user=root
# Deployment type: origin or openshift-enterprise
openshift_deployment_type=origin
# Resolvable domain (for testing you can use external ip of the master node)
openshift_master_default_subdomain=54.164.5.171.nip.io
openshift_hosted_manage_registry=true
openshift_hosted_manage_router=true
openshift_router_selector='node-role.kubernetes.io/infra=true'
openshift_registry_selector='node-role.kubernetes.io/infra=true'
openshift_master_api_port=443
# External ip of the master node
openshift_master_cluster_hostname=54.164.5.171.nip.io
# External ip of the master node
openshift_master_cluster_public_hostname=54.164.5.171.nip.io
openshift_master_console_port=443
openshift_docker_insecure_registries=172.30.0.0/16
openshift_master_identity_providers=[{'name': 'htpasswd_auth', 'login': 'true', 'challenge': 'true', 'kind': 'HTPasswdPasswordIdentityProvider'}]

# host group for masters
[masters]
54.164.5.171

# host group for etcd
[etcd]
54.164.5.171

# host group for nodes, includes region info
[nodes]
54.164.5.171 openshift_node_group_name='node-config-master'
52.90.165.132 openshift_node_group_name='node-config-compute'
54.86.70.56 openshift_node_group_name='node-config-compute'
18.208.130.47 openshift_node_group_name='node-config-infra'
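The master's public IP is repeated in several places in this inventory, so a typo in any one of them can break the install. A minimal sketch of a hypothetical render_inventory bash function that writes the same settings (comments omitted) from a list of IPs; the argument order (master, infra, then compute nodes) is an assumption of this sketch:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the inventory for one master/etcd host, one infra
# node and any number of compute nodes.
# Usage: render_inventory MASTER_IP INFRA_IP COMPUTE_IP... > /etc/ansible/hosts
render_inventory() {
  local master=$1 infra=$2
  shift 2
  local ip
  cat <<EOF
[OSEv3:children]
masters
nodes
etcd

[OSEv3:vars]
ansible_ssh_user=root
openshift_deployment_type=origin
openshift_master_default_subdomain=${master}.nip.io
openshift_hosted_manage_registry=true
openshift_hosted_manage_router=true
openshift_router_selector='node-role.kubernetes.io/infra=true'
openshift_registry_selector='node-role.kubernetes.io/infra=true'
openshift_master_api_port=443
openshift_master_cluster_hostname=${master}.nip.io
openshift_master_cluster_public_hostname=${master}.nip.io
openshift_master_console_port=443
openshift_docker_insecure_registries=172.30.0.0/16
openshift_master_identity_providers=[{'name': 'htpasswd_auth', 'login': 'true', 'challenge': 'true', 'kind': 'HTPasswdPasswordIdentityProvider'}]

[masters]
${master}

[etcd]
${master}

[nodes]
${master} openshift_node_group_name='node-config-master'
EOF
  # Remaining arguments are compute nodes; the infra node is listed last.
  for ip in "$@"; do
    printf "%s openshift_node_group_name='node-config-compute'\n" "$ip"
  done
  printf "%s openshift_node_group_name='node-config-infra'\n" "$infra"
}
```

For the example cluster, render_inventory 54.164.5.171 18.208.130.47 52.90.165.132 54.86.70.56 > /etc/ansible/hosts produces the same host groups and variables as the inventory above, minus the comments.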

Step 5: Running the openshift-ansible Playbooks

- Clone the openshift-ansible repository and check out the release-3.10 branch:

git clone https://github.com/openshift/openshift-ansible
cd openshift-ansible
git checkout release-3.10

- Run the prerequisites.yml playbook. It needs to be run only once, before deploying a new cluster:

ansible-playbook -i /etc/ansible/hosts playbooks/prerequisites.yml

- Run the deploy_cluster.yml playbook to start the cluster installation:

ansible-playbook -i /etc/ansible/hosts playbooks/deploy_cluster.yml
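Because deploy_cluster.yml is only worth running once prerequisites.yml has succeeded, the two commands can be wrapped so the second runs only if the first passes. A minimal sketch; run_openshift_install and the ANSIBLE_PLAYBOOK and INVENTORY overrides are hypothetical names for this sketch, and any extra arguments are passed through to ansible-playbook:

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: run the two playbooks in order from inside the
# openshift-ansible checkout, stopping at the first failure.
# ANSIBLE_PLAYBOOK and INVENTORY are optional overrides (assumed names).
run_openshift_install() {
  local playbook_cmd=${ANSIBLE_PLAYBOOK:-ansible-playbook}
  local inventory=${INVENTORY:-/etc/ansible/hosts}
  local pb
  for pb in playbooks/prerequisites.yml playbooks/deploy_cluster.yml; do
    echo "Running $pb" >&2
    # Extra arguments (for example -e key=value) go to every playbook run.
    if ! "$playbook_cmd" -i "$inventory" "$pb" "$@"; then
      echo "Failed: $pb" >&2
      return 1
    fi
  done
}
```

Run it from the openshift-ansible directory as run_openshift_install, or as run_openshift_install -e openshift_disable_check=disk_availability,docker_image_availability,docker_storage if you hit the validation failures mentioned in the notes at the end. Since prerequisites.yml only needs one run per new cluster, calling deploy_cluster.yml directly on later re-runs is also fine.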

Step 6: Validating the Environment

- Verify that the Master, Compute and Infra nodes are all in Ready status using the command below:

kubectl get nodes

- Once the installation is completed, ensure that the status of the system pods is healthy using the command:

kubectl get pods --namespace=kube-system

Ensure that the system pods are in Running status, and check the RESTARTS column for pods that keep restarting.

- Verify the OpenShift version using the command:

oc version

Note that the output of oc version also shows the server URL, which is used to reach the OpenShift console in Step 8.
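Reading these tables by eye gets tedious as the cluster grows. A minimal sketch with two hypothetical awk-based bash functions that take the output of the kubectl commands above (with --no-headers added) and print anything that needs a closer look; the column positions assume the default kubectl table layout:

```shell
#!/usr/bin/env bash
# Hypothetical check: read `kubectl get nodes --no-headers` output on stdin
# (columns: NAME STATUS ROLES AGE VERSION) and print every node whose STATUS
# does not start with "Ready" (so "Ready,SchedulingDisabled" counts as ready).
nodes_not_ready() {
  awk '{ split($2, parts, ","); if (parts[1] != "Ready") print $1 }'
}

# Hypothetical check: read
#   kubectl get pods --namespace=kube-system --no-headers
# output on stdin (columns: NAME READY STATUS RESTARTS AGE) and print pods
# that are not Running or have restarted more than MAX_RESTARTS times.
# Usage: pods_needing_attention [MAX_RESTARTS]   (default 0)
pods_needing_attention() {
  awk -v max="${1:-0}" '$3 != "Running" || $4 + 0 > max + 0 { print $1 }'
}
```

For example, kubectl get nodes --no-headers | nodes_not_ready prints nothing when every node is Ready, and kubectl get pods --namespace=kube-system --no-headers | pods_needing_attention lists the system pods worth inspecting.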

Step 7: Creating a user

- Create a user “developer” using the command:

oc create user developer

- Set the password of the “developer” user using the command:

htpasswd -c <Identity provider file> developer

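The path of the htpasswd identity provider file is set in /etc/origin/master/master-config.yaml (the file field of the HTPasswdPasswordIdentityProvider entry). Note that -c creates the file and overwrites it if it already exists, so omit -c when adding further users.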

For example:

htpasswd -c /etc/origin/master/htpasswd developer
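Because htpasswd -c recreates the file, reusing the example command for a second user would drop the existing entries. A minimal sketch of a hypothetical set_htpasswd_user bash function that passes -c only when the file does not exist yet; HTPASSWD_BIN is an assumed override used only for testing:

```shell
#!/usr/bin/env bash
# Hypothetical helper: add or update an htpasswd user without wiping the file.
# Usage: set_htpasswd_user USER [FILE]   (default /etc/origin/master/htpasswd)
set_htpasswd_user() {
  local user=$1
  local file=${2:-/etc/origin/master/htpasswd}
  local bin=${HTPASSWD_BIN:-htpasswd}
  if [ -f "$file" ]; then
    "$bin" "$file" "$user"      # file exists: add/update, prompts for password
  else
    "$bin" -c "$file" "$user"   # first user: create the file, then prompt
  fi
}
```

For example, set_htpasswd_user developer on the Master, followed later by set_htpasswd_user with another user name, keeps both entries in the file.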

Step 8: Logging into the OpenShift UI

- Log in to the OpenShift console using the URL:

https://54.164.5.171.nip.io:443

Note: This URL can be obtained from the output of the oc version command.

Disclaimer: All data and information provided on this site is for informational and learning purposes only. cloudliftandshift.com makes no representations as to accuracy, completeness, currentness, suitability, or validity of any information on this site and will not be liable for any errors, issues, or any losses, damages arising from its display or use. This is a personal weblog. The opinions expressed here represent my own and not those of anyone.

Things to note:

1. You have to install Ansible version 2.4.3.0. CentOS 7 on AWS comes with the latest Ansible version installed, so install pip and use it to install the pinned version: pip install ansible==2.4.3.0

2. You have to add id_rsa.pub to the authorized keys on the Master node itself, because the Master has to be able to SSH to itself.

3. You have to add the following variables to /etc/ansible/hosts (in the [OSEv3:vars] section):

openshift_version=3.10
openshift_release='3.10'
openshift_pkg_version=-3.10.0
openshift_image_tag='v3.10'

4. You have to enable the OpenShift Origin testing repository for yum on all nodes:

cat > /etc/yum.repos.d/CentOS-OpenShift-Origin-CBS.repo <<EOF
[centos-openshift-origin-testing-cbs]
name=CentOS OpenShift Origin Testing CBS
baseurl=https://cbs.centos.org/repos/paas7-openshift-origin310-testing/x86_64/os/
enabled=1
gpgcheck=0
gpgkey=file:///etc/pki/rpm-gpg/openshift-ansible-CentOS-SIG-PaaS
EOF

5. You have to allow traffic from anywhere to all ports on all the AWS instances; the security groups need to be edited for this.

6. You have to start Docker on all nodes: service docker start

7. You might run into some validation failures when running the OpenShift installation. To skip those checks, add openshift_disable_check=disk_availability,docker_image_availability,docker_storage to the ansible-playbook command (as an extra variable with -e) or to the hosts file.
